After a report by ProPublica, a news agency in the United States, raised questions about the encryption WhatsApp uses in cases where people break the rules, the app has decided to change its reporting tool.

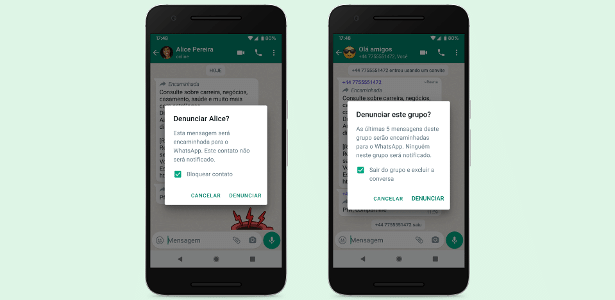

It is now possible to report specific messages to WhatsApp for violating the application’s rules. Before the latest update, you could only report people or entire groups, and the report included the last five messages exchanged in the conversation.

“There have been comments that users sometimes want to report an account for messages they received earlier in the conversation, and this update allows these people to forward annoying messages directly to WhatsApp,” the company said in announcing the change.

To report a single or older message, people can now press and hold the message until the “report” option appears, then tap it. Doing this still reports the person, but it also flags that specific message so moderators can look at it more closely.

The controversy

In September, ProPublica revealed that Facebook had hired, through an outsourcing company, more than a thousand “content moderators” for WhatsApp, just as it does for the company's other platforms, such as Facebook and Instagram. Their job is to review messages reported by users.

Contrary to what the article suggested in a version that was later corrected, this moderation work does not break the end-to-end encryption of the messages.

For moderators to read a conversation, the user must first decide to report that account to WhatsApp and, in doing so, agree to send a copy of recent messages for the moderators to evaluate.

How the complaint works

When you report a contact on WhatsApp for breaking the application’s rules, the last five messages in the conversation with that person are sent in decrypted form to WhatsApp engineers and “moderators”.

Next, an artificial intelligence system compares the unencrypted data from that account with a database of “suspicious actions”, such as sending messages in bulk to many people at the same time.

If there is a “match”, a WhatsApp moderator is alerted and can choose whether to look at the messages, and then decide whether to put the reported user under surveillance or ban them from the app.
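The flow described above can be pictured with a small sketch. It is purely illustrative and is not WhatsApp's code: every name in it (`Report`, `build_report`, `matches_suspicious_action`, `triage`), the `recipients_last_hour` field, and the bulk-sending threshold are assumptions made up for this example. Only the five-message window comes from the description above.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical values and names for illustration only; none of them are
# taken from WhatsApp. Only the five-message window is from the article.
RECENT_MESSAGE_COUNT = 5
MASS_SEND_THRESHOLD = 50  # assumed cutoff for "sending in bulk"


@dataclass
class Report:
    reported_account: str
    messages: List[str]            # decrypted copies forwarded by the reporter
    recipients_last_hour: int = 0  # assumed unencrypted account data


def build_report(account, conversation, recipients_last_hour=0):
    """Package the most recent messages of a conversation into a report."""
    recent = conversation[-RECENT_MESSAGE_COUNT:]
    return Report(account, recent, recipients_last_hour)


def matches_suspicious_action(report):
    """Stand-in for the automated comparison with known suspicious actions."""
    return report.recipients_last_hour >= MASS_SEND_THRESHOLD


def triage(report, review_queue):
    """Alert a human moderator only when the automated check finds a match."""
    if matches_suspicious_action(report):
        review_queue.append(report)
        return True
    return False
```

In this sketch, a report on an account that messaged 120 recipients in an hour would land in the review queue, while a report on an account with ordinary activity would not. The human decision that follows (monitoring or banning the user) is deliberately left out.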

WhatsApp, meanwhile, says these employees aren’t moderators like those at Instagram or Facebook because they can’t remotely delete messages.

“Although there is no content moderation on WhatsApp, complaints help combat rarer but much more extreme abuses, such as the sharing of child sexual abuse material. Last year, these complaints allowed 400,000 referrals to be sent to the National Center for Missing and Exploited Children,” says the company.
