NVIDIA Research has announced GET3D, an automatic 3D model generation technology that aims to help 3D content creators quickly obtain accurate 3D objects. GET3D is an AI model trained on 2D images that generates 3D shapes with realistic textures and complex geometry, and the resulting models can be imported into 3D renderers and game engines for further editing. GET3D can generate content ranging from animals, vehicles, people and buildings to outdoor spaces and even entire cities.

In the future, small game studios or metaverse artists with limited resources will be able to use GET3D to generate a rich set of basic 3D objects. The 3D artists on the team can then focus on refining the details of the main characters' 3D models and make only light edits to the generated secondary objects, building 3D worlds filled with a wide variety of objects.

The name GET3D stands for Generate Explicit Textured 3D. The shapes it creates take the form of a triangle mesh covered with a textured material, much like a papier-mâché model, so the objects GET3D generates can be imported into traditional 3D content creation tools and game engines for further changes.
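To make the idea of an explicit textured triangle mesh more concrete, here is a minimal sketch in Python. It is not GET3D's code or its export format; it only illustrates the general data layout (vertex positions, texture coordinates, and triangles indexing into both) by writing a tiny square as Wavefront OBJ text, a plain-text format that many 3D tools can import. The function name `mesh_to_obj` and the sample data are hypothetical, and the sketch omits the separate material file that would point to the actual texture image.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]   # vertex position (x, y, z)
Vec2 = Tuple[float, float]          # texture coordinate (u, v)
Face = Tuple[int, int, int]         # three 0-based vertex indices


def mesh_to_obj(vertices: List[Vec3], uvs: List[Vec2], faces: List[Face]) -> str:
    """Serialize a textured triangle mesh as Wavefront OBJ text.

    Hypothetical helper for illustration: assumes one texture
    coordinate per vertex, stored at the same index.
    """
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"vt {u} {v}" for u, v in uvs]
    # OBJ indices are 1-based; each corner is written as vertex/texcoord.
    for a, b, c in faces:
        lines.append(f"f {a + 1}/{a + 1} {b + 1}/{b + 1} {c + 1}/{c + 1}")
    return "\n".join(lines) + "\n"


# A unit square made of two triangles, with the texture stretched across it.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
faces = [(0, 1, 2), (0, 2, 3)]

obj_text = mesh_to_obj(vertices, uvs, faces)
print(obj_text)
```

Because the geometry and texture mapping are stored explicitly like this, rather than implicitly inside a neural network, standard modeling tools and game engines can open and edit the result directly.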

The inverse rendering methods traditionally used to build 3D models of real objects reconstruct a shape from multiple 2D views of the object, but each set of photos yields only one 3D model, which is inefficient. GET3D instead builds on the kind of generative adversarial network used to generate 2D images, extending it so that it produces 3D objects as well. When running inference on an NVIDIA GPU, it can generate about 20 shapes per second. The process resembles an artist looking at an image, sculpting a model in clay, and then coloring it.

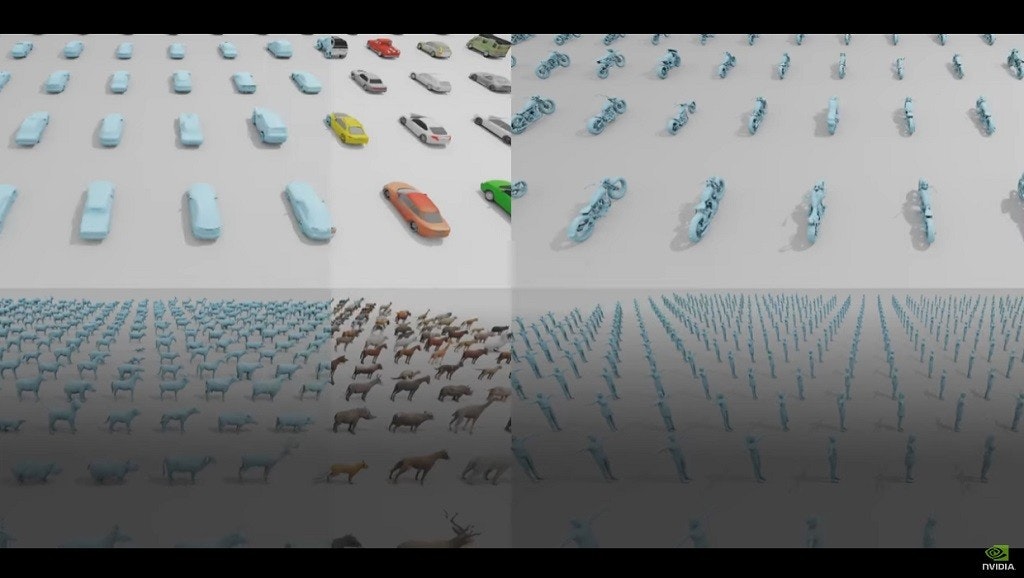

▲ Currently, GET3D can be trained on, and generate 3D objects for, only one category of 2D images at a time. In the future, it should be able to take in multiple categories and generate many kinds of 3D objects at once.

NVIDIA Research trained GET3D on about 1 million 2D images of 3D shapes captured from different angles, using NVIDIA A100 Tensor Core GPUs, and completed training within two days. Once trained on a dataset of 2D car images, GET3D can generate 3D sedans, trucks, race cars and vans; trained on 2D animal images, it can produce 3D foxes, rhinos, horses and bears; and trained on 2D chair images, it can create a variety of 3D swivel chairs, dining chairs and lounge chairs.

The generated content can not only be exported to various graphics applications but can also be combined with StyleGAN-NADA, another tool from NVIDIA Research, to apply effects through text descriptions, such as giving a 3D house a dilapidated look or turning an intact 3D car into a scratched or even burned one.

The research team says a future version of GET3D could incorporate camera pose estimation, so that the model can be trained on real-world data instead of synthetic datasets. GET3D is also expected to be extended to support universal generation, meaning it could be trained on many kinds of 3D shapes at once rather than on only one object category at a time.
