Artificial intelligence (AI) technologies continue to advance, and Microsoft has unveiled Kosmos-1, a multimodal model capable of analyzing images, solving visual puzzles, performing visual text recognition, passing visual IQ tests, and understanding natural language instructions. What makes Kosmos-1 different from other AI models? Its multimodal approach.

The importance of the multimodal approach

Some AI experts believe that achieving artificial general intelligence (AGI), meaning AI that can perform general tasks at a human level, requires a multimodal approach. This approach integrates different input modes, such as text, audio, images, and video, to achieve a form of perception that simulates human perception.

The researchers describe Kosmos-1 as a “multimodal large language model” (MLLM), because it relies on natural language processing just like a text-only LLM such as ChatGPT. For Kosmos-1 to accept images as input, however, the image must first be translated into a special series of tokens (basically, text) that the model can understand.
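To make the idea of turning an image into a series of tokens more concrete, here is a minimal Python sketch. It is not Microsoft's implementation. The helper names (`image_to_patches`, `build_sequence`), the patch size, the `<image>` and `</image>` boundary markers, and the use of raw pixel patches as stand-ins for learned image embeddings are all assumptions made for illustration.

```python
from typing import List, Union

Pixel = float
Image = List[List[Pixel]]             # a grayscale image as rows of pixel values
Token = Union[str, List[Pixel]]       # either a text token or one image patch


def image_to_patches(image: Image, patch: int) -> List[List[Pixel]]:
    """Split a 2D image into non-overlapping patch x patch blocks.

    Each block is flattened into a single list, which plays the role of one
    "image token" in this toy example.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [
                image[row][col]
                for row in range(top, top + patch)
                for col in range(left, left + patch)
            ]
            patches.append(block)
    return patches


def build_sequence(text_before: str, image: Image, text_after: str,
                   patch: int = 2) -> List[Token]:
    """Interleave text tokens and image patches into one input sequence.

    The <image> and </image> markers (an assumption here) tell the model
    where the image portion starts and ends.
    """
    sequence: List[Token] = []
    sequence.extend(text_before.split())   # naive whitespace tokenization
    sequence.append("<image>")
    sequence.extend(image_to_patches(image, patch))
    sequence.append("</image>")
    sequence.extend(text_after.split())
    return sequence
```

A 4x4 image with a patch size of 2 yields four image tokens, so a prompt such as `build_sequence("What is", image, "?")` produces a single sequence in which text and image content sit side by side. A real system would replace the raw pixel patches with embeddings from a trained vision encoder.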

The training of Kosmos-1

Microsoft trained Kosmos-1 using data from the web. After training, the researchers assessed Kosmos-1’s abilities in various tests, including language comprehension, language generation, OCR-free text classification, image description, visual question answering, web page question answering, and image classification without prior training (zero-shot). In many of these tests, Kosmos-1 outperformed current cutting-edge models.

Raven’s Visual IQ Test

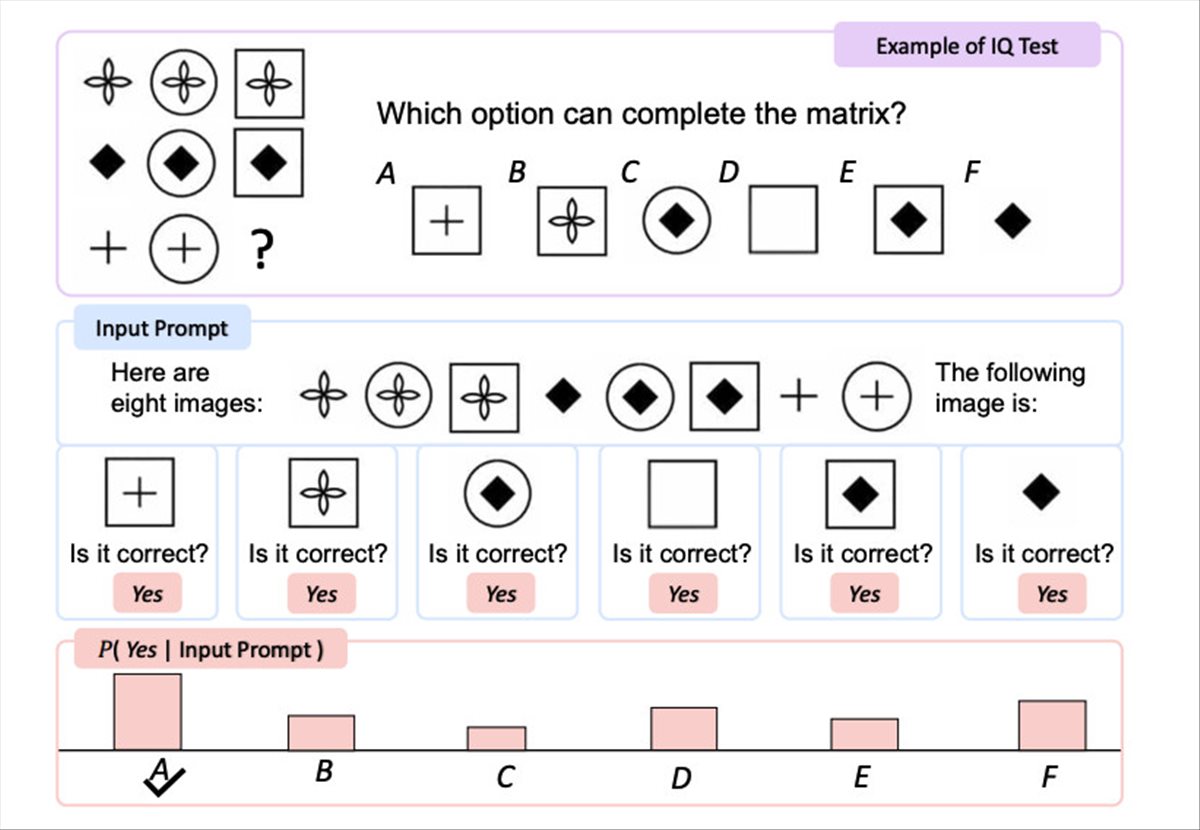

One interesting aspect is Kosmos-1’s performance on Raven’s Progressive Matrices, a test that measures visual IQ by presenting a sequence of shapes and asking the test taker to complete it. To test Kosmos-1, the researchers showed it the test filled in with each candidate answer, one at a time, and asked whether the answer was correct. Kosmos-1 answered a Raven question correctly only 22% of the time (26% with fine-tuning), which is still better than chance (17%).
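The evaluation procedure described above can be sketched roughly as follows. This is an illustrative Python outline, not the researchers' code: `score_yes` is a hypothetical stand-in for whatever the model returns when asked whether a completed puzzle is correct, and the six-option assumption is inferred from the 17% chance figure (1/6 ≈ 0.167).

```python
from typing import Callable, Sequence, TypeVar

Candidate = TypeVar("Candidate")


def pick_candidate(candidates: Sequence[Candidate],
                   score_yes: Callable[[Candidate], float]) -> int:
    """Return the index of the candidate the model rates most likely correct.

    Each candidate is scored independently, mirroring the "one at a time"
    procedure. Ties go to the earliest candidate.
    """
    scores = [score_yes(candidate) for candidate in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


def chance_accuracy(num_options: int) -> float:
    """Accuracy expected from guessing uniformly among num_options answers."""
    return 1.0 / num_options


def accuracy(predictions: Sequence[int], answers: Sequence[int]) -> float:
    """Fraction of questions where the predicted index matches the answer."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("predictions and answers must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)
```

With six options per question, `chance_accuracy(6)` is about 0.17, which is the baseline against which the reported 22% and 26% scores should be read.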

Future improvements in the Kosmos-1 model

Although Kosmos-1 represents early steps in the multimodal domain (an approach also pursued by others), it is easy to imagine that future optimizations could bring even more significant results, allowing AI models to perceive any form of media and act on it. The researchers state that in the future they would like to scale up Kosmos-1 in model size and integrate speech capability.

This multimodal approach is seen as an important step toward AGI. Although Kosmos-1 is a purely Microsoft project, its approach aligns with OpenAI’s stated goal of achieving AGI. Microsoft plans to make Kosmos-1 available to developers, which could lead to future enhancements in multimodal perception and AI assistant capabilities. With Kosmos-1, AI moves a step closer to perceiving and acting on the world the way humans do.

More information at arxiv.org and in this PDF.