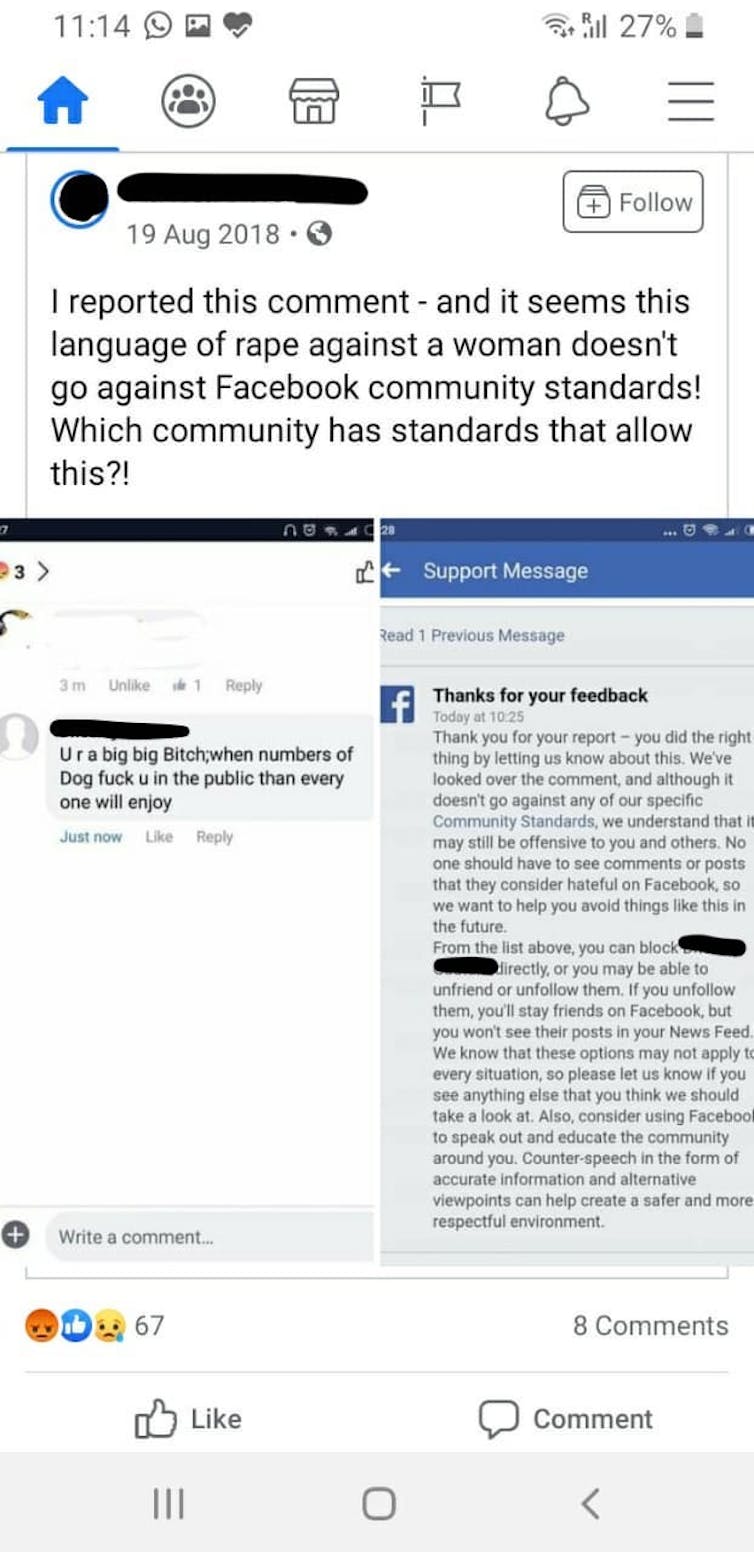

If, like many Australian Muslims, you have reported hate speech on Facebook and received an automatic response saying it does not violate the platform’s community standards, you are not alone.

Our team is the first group of Australian sociologists to receive Facebook's Content Policy Research Award. We used it to investigate hate speech on LGBTQI+ community pages in five Asia-Pacific countries: India, Myanmar, Indonesia, the Philippines and Australia.

We looked at three aspects of hate speech regulation in the Asia-Pacific over an 18-month period. First, we mapped hate speech law in our case study countries to understand how the issue could be dealt with legally. Second, we examined whether Facebook's definition of "hate speech" covers all recognized forms and contexts of this harmful behavior.

Third, we mapped Facebook's content regulation teams and spoke with staff about how company policies and procedures work to identify new forms of hate.

Although Facebook funded our study, it said it could not give us access to its records of deleted hate speech because of privacy concerns. We were therefore unable to test how effectively its in-house moderators classify hate.

Instead, we collected posts and comments from the top three public LGBTQI+ Facebook pages in each country to look for hate speech that had been missed by the platform's artificial intelligence filters or its human moderators.

Administrators feel abandoned

We asked the administrators of these pages about their experiences of moderating hate and what they think Facebook could do to reduce abuse.

They told us that Facebook would often dismiss their hate speech reports, even when a post clearly breached its community standards. In some cases, posts that had originally been deleted were reinstated on appeal.

Most page administrators said the so-called "flagging" process rarely worked, and they found it disempowering. They wanted Facebook to consult with them more, to get a better idea of the types of abuse they see posted and why it constitutes hate speech in their cultural context.

Defining hate speech is not the problem

Facebook has long had a problem with the extent and reach of hate speech on its platform in Asia. For example, while it has banned certain Hindu extremists, it has kept their pages online.

However, during our study, we were pleased to see Facebook broaden its definition of hate speech to cover a wider range of hateful behavior. It also now explicitly recognizes that what happens online can trigger violence offline.

It is worth noting that in the countries we focused on, "hate speech" is rarely specifically prohibited by law. We found that other regulations, such as laws on cybersecurity or religious tolerance, could be used to tackle hate speech, but were instead being used to quell political dissent.

We concluded that Facebook's problem is not how it defines hate, but its inability to identify certain types of hate, such as hate expressed in minority languages and regional dialects. It also often fails to respond adequately to user reports of hateful content.

Where hate was worst

Media reports have shown that Facebook struggles to automatically identify hate in minority languages. It has not provided its own moderators with training materials in local languages, even though many of them come from Asia-Pacific countries where English is not the first language.

In the Philippines and Indonesia in particular, we found that LGBTQI+ groups face unacceptable levels of discrimination and intimidation. These include death threats, attacks against Muslims and threats of stoning or beheading.

On Indian pages, Facebook's filters failed to catch vomit emojis posted in response to photos of gay weddings, and the platform rejected some very clear reports of defamation.

In Australia, by contrast, we found no unmoderated hate speech, only other kinds of callous and inappropriate comments. This could indicate that less abuse is posted there, or that moderation in English is more effective, whether by Facebook or by page admins.

Similarly, LGBTQI+ groups in Myanmar experienced very little hate speech. However, we are aware that Facebook has been working hard to reduce hate speech on its platform there, after the platform was used to persecute the Rohingya Muslim minority.

Moreover, gender diversity is probably not as contentious an issue in Myanmar as it is in India, Indonesia and the Philippines. In those countries, LGBTQI+ rights are highly politicized.

Facebook has taken important steps to combat hate speech. However, we are concerned that COVID-19 has forced the platform to rely more on machine moderation. This comes at a time when it can automatically detect hate in only about 50 languages, even though thousands are spoken across the region every day.

What we recommend

Our report to Facebook contains several key recommendations for improving how it tackles hate on its platform. Overall, we have encouraged the company to consult persecuted groups in the region more regularly, so that it can learn more about hate in their local contexts and languages.

This should be accompanied by an increase in the number of its country policy specialists and in-house moderators with expertise in minority languages.

Mirroring efforts in Europe, Facebook should also develop and publicize its trusted partner channel. This channel provides visible, official partner organizations through which people can report hateful activity directly to Facebook during crises such as the Christchurch mosque attacks.

More broadly, we would like governments and NGOs to work together to set up a regional process for monitoring hate speech in Asia, similar to the one organized by the European Union.

Modeled on the EU, such an initiative could help identify pressing trends in hate speech in the region, strengthen Facebook’s local reporting partnerships and reduce the overall spread of hate content on Facebook.

Article by Fiona R Martin, Associate Professor of Convergent and Online Media, University of Sydney and Aim Sinpeng, Senior Lecturer in Government and International Relations, University of Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.