Meteor Lake with tiled architecture

Hello everyone, my name is Kei Tsukushi. Previously, I worked as an engineer at a semiconductor company. CPUs in computing devices continue to become smaller and more complex as functions other than the CPU are integrated into a single chip. I would like to thoroughly examine the specifications of these cutting-edge CPUs (SoCs) from the perspective of a former semiconductor engineer, and explain them in an easy-to-understand manner to everyone.

In this article, we will explain Intel’s next processor, code-named “Meteor Lake,” about which a great deal of information is emerging ahead of its release at the end of the year.

What Meteor Lake was aiming for and the new method Intel took to achieve it

Let’s start by reviewing Intel’s development goal for Meteor Lake. The goal is to “build the most power-efficient client SoC,” and this goal alone shows that Meteor Lake is clearly different from the usual generational evolution of a CPU. It seems to reflect the company’s determination to rethink the design itself, rather than merely refining conventional power management to make it stronger.

Among the technologies adopted in Meteor Lake, Intel explains new features from two major perspectives: “power efficiency” and “Intel Thread Director.”

The design approach, in Intel’s words, was: “We aim to build the most power-efficient client SoC (product for PCs).” To that end, Intel set a new low-power operating point and improved the previous architecture to achieve the required performance. This took several different breakthroughs.

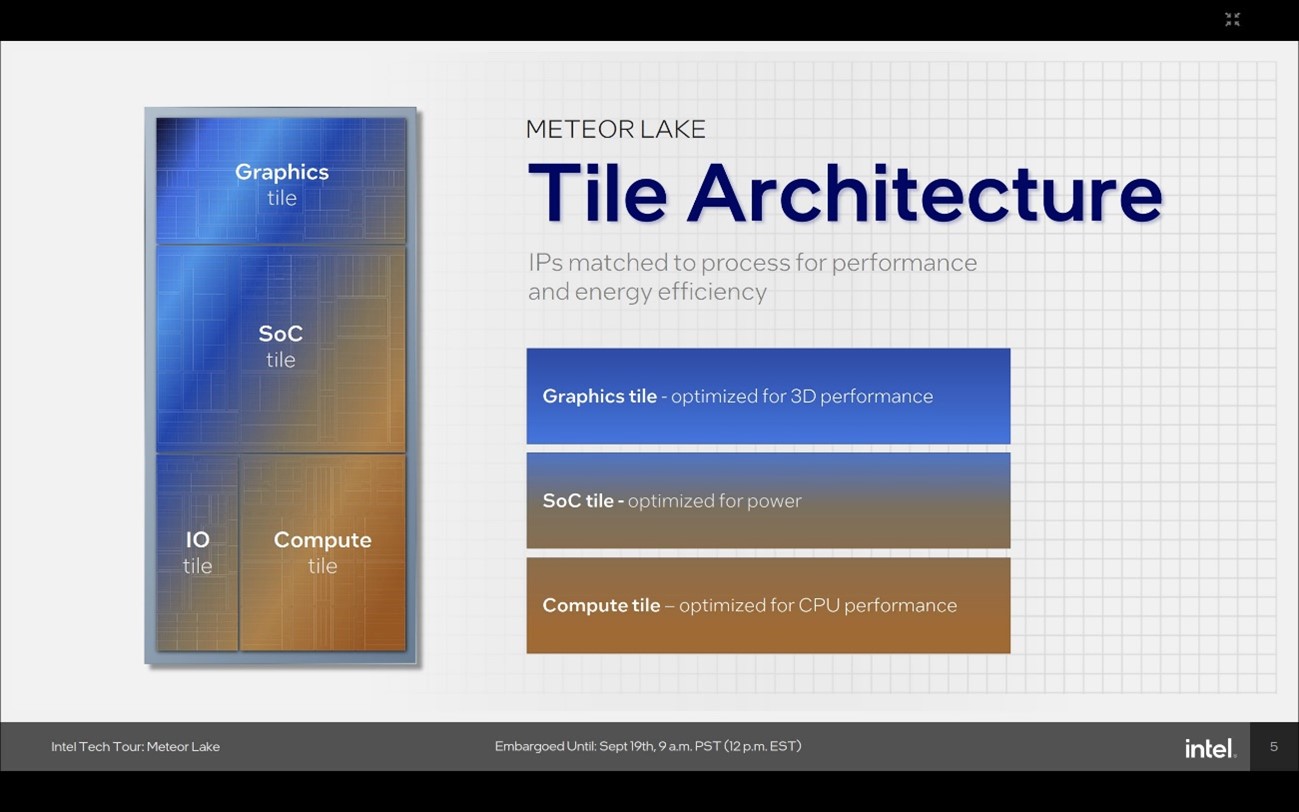

Meteor Lake is built from four distinct tiles (Intel’s term for chiplets) connected by what Intel calls industry-leading 3D packaging technology, in pursuit of its next-generation architecture goals. This was made possible by the latest Intel 4 semiconductor manufacturing process technology. In other words, Meteor Lake uses a so-called disaggregated architecture (distributed architecture).

Blocks that would normally be integrated into a single CPU die are broken down into several parts and then connected on the package. You might think that this simply means building something like a large piece of silicon out of small pieces of silicon, but that is not the case; it is a very interesting design.

The important thing about Meteor Lake’s distributed structure was to divide the monolithic architecture in an optimal manner, partitioning it so as to provide flexibility in design. In addition, each tile is matched with a suitable semiconductor process technology, with the aim of high efficiency and performance. The result is a design that takes advantage of strengths that only the optimized tiles of a distributed architecture can offer.

Next, let’s look at it from the perspective of power efficiency as a semiconductor. Meteor Lake’s distributed architecture improves power efficiency in each of the four tiles, and allows each tile to be optimized for its workload. The design idea here seems to be to create a distributed architecture that achieves the same efficiency as a monolithic die (a traditional semiconductor product made from a single piece of silicon). Semiconductor designers will know that this is quite a challenge.

Key points for improving power efficiency in Meteor Lake

Intel describes in four parts how the design makes a distributed architecture behave like a monolithic die.

Tile configuration to achieve power efficiency: The partitioning focuses on the power management architecture. With four tiles in Meteor Lake, power management can be scaled (adapted) across the tiles. In other words, the partitioning allows flexibility in adjusting platform power and workload management between tiles. Meteor Lake provides centralized power management authority over each tile in a granular manner.

New scalable fabric: A new scalable fabric (a flexible connectivity network between tiles) is key to centrally managing power across the four tiles. This scalable fabric enables both high performance and power efficiency. The fabric has also been enhanced to enable more bandwidth across Meteor Lake and additional traffic between tiles to get the processing power needed.

Need for a centralized approach to power efficiency optimization in a distributed architecture: Power management is achieved through a centralized approach that determines what the workload of each app will be and centrally adjusts the controllers on the partitioned tiles. These controllers work together to optimize power for each operating point so that the tiles can be adjusted in a unified manner.

Software power efficiency optimization: This also seems to be quite important. It concerns how Intel Thread Director works to adjust power efficiency at the SoC level across the tiles. This allows apps to run their workloads with the best balance of efficiency and performance on each tile in a distributed architecture.

Adopts two E cores in SoC tile

Let’s look at how workloads are handled in a distributed architecture under this power efficiency optimization.

If the power unit is aware of running workloads that do not require peak performance, it can communicate scheduling hints to the OS, and the OS can then schedule a migration of those workloads from the main CPU tile (compute tile) to the SoC tile (low power island).

Then, once the workloads have been migrated to the SoC tile, the compute tile can be turned off completely. This extends battery life and improves heat dissipation and platform efficiency, while the workloads moved to the SoC tile continue to operate normally.
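The flow above can be pictured with a small toy model. This is only a sketch under my own assumptions, not Intel’s implementation: the `Workload` and `Platform` classes, the `needs_peak_performance` flag, and the rule “migrate only when no workload needs peak performance” are all hypothetical simplifications of the hint-and-migrate behavior described in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    needs_peak_performance: bool  # hypothetical stand-in for the power unit's view


@dataclass
class Platform:
    compute_tile: list = field(default_factory=list)  # workloads on the compute tile
    soc_tile: list = field(default_factory=list)      # workloads on the low power island
    compute_tile_powered: bool = True

    def apply_scheduling_hint(self) -> bool:
        """Migrate everything to the SoC tile if nothing needs peak performance.

        Returns True when the migration happened and the compute tile was
        switched off, False when the compute tile has to stay on.
        """
        if any(w.needs_peak_performance for w in self.compute_tile):
            return False
        # Step 1: move the workloads over to the SoC tile.
        self.soc_tile.extend(self.compute_tile)
        self.compute_tile.clear()
        # Step 2: only after the migration, power the compute tile down.
        self.compute_tile_powered = False
        return True


platform = Platform(compute_tile=[Workload("mail sync", False),
                                  Workload("file indexing", False)])
if platform.apply_scheduling_hint():
    print("compute tile off, SoC tile runs:",
          [w.name for w in platform.soc_tile])
```

The ordering is the point of the sketch: the workloads are moved first, and the compute tile is powered down only once nothing is left running on it.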

In terms of thermal performance, less heat is generated in processing workloads than with traditional architectures, which leaves the platform more headroom for performance and responsiveness. This is a fundamental change from the power efficiency approach on traditional platforms.

What you can expect from Meteor Lake-equipped notebooks is longer battery life and a better thermal design that makes them quieter. During normal use, most work will be done in low power mode. This leaves thermal headroom, and that headroom can be used to deliver the necessary processing performance, providing comfortable usability including the required responsiveness.

Meteor Lake SoC tiles (low power islands) overview

An important optimization to achieve these goals is the adoption of E-cores optimized for the SoC tile (low power island). These are different from the E-cores on the compute tile. I would like to explain a little more about this SoC tile.

The SoC tile has a large number of IP blocks that allow it to perform various tasks on its own. Two E-cores are implemented to handle a wide range of workloads, and these E-cores are unique in that they can operate while other tiles are in low-power mode or completely powered off.

The next important step was to focus on what could be done with these two E-cores. This matters because some workloads do not require additional performance. In other words, Intel has prepared a mechanism to maximize the range of workloads that can be processed by the E-cores on the SoC tile.

In addition, the compute tile can be quickly turned back on as the workload demands, so the P-cores and E-cores on the compute tile are ready for use almost immediately. When a new thread requires more performance than the SoC tile’s E-cores can provide, the compute tile is quickly turned on to supply the required processing power, so performance does not have to be traded off for battery life.
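As a rough illustration of this wake-up behavior, the decision can be written as a single comparison. This is a sketch under assumptions of my own: the capacity constant, the abstract “demand” unit, and the function name `place_thread` are hypothetical and are not taken from Intel’s materials.

```python
# Hypothetical capacity of the SoC tile's two E-cores, in arbitrary units.
SOC_TILE_CAPACITY = 1.0


def place_thread(demand: float, compute_tile_on: bool) -> tuple:
    """Decide where a new thread runs and whether the compute tile is on afterwards.

    A thread that fits within the SoC tile's E-cores stays on the low power
    island and leaves the compute tile's power state untouched. A thread that
    needs more wakes the compute tile.
    """
    if demand <= SOC_TILE_CAPACITY:
        return "soc_tile", compute_tile_on
    return "compute_tile", True


compute_tile_on = False
for name, demand in [("web page", 0.4), ("video export", 2.5)]:
    target, compute_tile_on = place_thread(demand, compute_tile_on)
    print(f"{name}: runs on {target}, compute tile on = {compute_tile_on}")
```

A real scheduler would also need a rule for turning the compute tile back off, which this sketch deliberately leaves out.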

The key point of Meteor Lake is that this architecture allows it to achieve both unprecedented low power consumption and high performance.

AI engine also integrated into tiles

Another important point is the integration of the AI engine into the SoC tile (low power island). Initially, Meteor Lake was expected to be Intel’s first SoC to integrate AI. When I heard this story, I couldn’t really imagine a scenario in which an AI engine would be used on a PC. However, the number of situations in which AI is used has recently increased considerably, so the decision to integrate an AI engine turned out to anticipate the future.

Intel’s analysis seems to predict that AI will be used in a variety of applications and uses. Moreover, some of these workloads are persistent workloads, which will become very important in the future and have various usage applications.

To address these situations, Meteor Lake integrates the AI engine into the SoC tile, increasing the workloads that the SoC tile can handle and enabling it to function in a wide range of scenarios while achieving high efficiency.

Processing AI workloads with an energy-saving AI engine instead of the CPU/GPU provides an environment where you can run continuous AI workloads without worrying about power consumption and heat generation.

My personal impression is that Intel’s AI PC is beyond my imagination, and I’m looking forward to seeing the way AI workloads are used on PCs evolve significantly.

A wide range of IP can operate even when the P core and E core are off.

The next important item is support for a wide range of IP, which is the purpose of the SoC tile (low power island). By placing a wide range of IP on the SoC tile, the P-cores and E-cores on the compute tile can be turned off completely while the SoC tile’s own resources still provide all the necessary processing functionality and run a variety of workloads.

This allows the SoC tile to support camera, display, and many other IP features that are key around the media block. This leads to significant enhancements to Meteor Lake’s hybrid architecture.

Now, let’s look at workload transitions from the perspective of the E-cores on the SoC tile (hereinafter referred to as Low Power E-cores, or LP E-cores) and the P-cores and E-cores on the compute tile.

Operating status of each core when IT background tasks are running

Background tasks, especially IT background tasks, are constantly running and consume a significant amount of power. Meteor Lake focuses on processing these tasks.

This diagram shows that in Meteor Lake, IT workloads run on the low power island, while the parts of the SoC tile other than the LP E-cores, as well as the E-cores and P-cores on the compute tile, are completely turned off. (The areas of the SoC tile that appear dark blue are operating, and the rest are off or in low power mode.)

This can be said to be one of the points of interest in the power optimization aimed at with Meteor Lake, which was not possible with conventional architectures. For these workloads, Intel’s demo showed 20 to 30 tasks running in the background in Task Manager. One core is required to perform one task.

When a user starts working, their tasks are processed in addition to these background tasks. Tasks such as viewing web pages were handled by the LP E-cores, and tiles and circuits not needed by the LP E-cores could be turned off or placed into a low-power mode. In the subsequent demo, video playback was also performed on the LP E-cores.

We will also briefly introduce the features of other hardware functions.

Other features

Integrated DLVR (digital linear voltage regulator)

Meteor Lake will be Intel’s first product to use a fully integrated DLVR. To optimize each IP, a voltage control point can be found for each IP, and each IP can have its own power supply.

Optimal power is supplied not only to each of the divided tiles, but also to each IP within the SoC tile. Optimal power supply seems to mean supplying the voltage/power that yields the best performance when performance is the priority, and supplying power at the most economical point when power saving is the priority.

The design is also quite advanced compared with previous architectures, for example in being able to adjust the supply dynamically for each workload. The DLVR has improved battery life, improved load efficiency, reduced power overhead, and improved the CPU package. We can expect it to appear in products after Meteor Lake as well.

Dynamic Fabric Frequency

The frequency of the fabric, including the memory fabric, can also be adjusted dynamically. The other I/O fabrics then adjust their bandwidth as needed to ensure the desired performance. Intel says the fabric now operates in the most efficient manner possible.

SoC algorithm

The SoC can dynamically recognize workloads and adjust each SoC block to maximize performance and power efficiency. Unused IP blocks can be turned off and turned back on when needed. This makes it possible to divide a fixed power budget among the IP blocks. For example, if your workload requires graphics performance, more power can be allocated to the graphics IP.
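The idea of sharing a fixed budget can be sketched as a simple proportional split. This is only an illustration under my own assumptions: the function `allocate_power`, the block names, the demand weights, and the 20 W figure are hypothetical, and the actual algorithm Intel uses is not described in this article.

```python
def allocate_power(total_budget_w: float, demand: dict) -> dict:
    """Split a fixed power budget across IP blocks in proportion to demand.

    Blocks with zero demand are treated as turned off and receive no power.
    """
    active = {block: d for block, d in demand.items() if d > 0}
    total_demand = sum(active.values())
    return {
        block: (total_budget_w * demand[block] / total_demand
                if block in active else 0.0)
        for block in demand
    }


# A graphics-heavy workload: graphics gets the largest share,
# and the unused block is switched off.
budget = allocate_power(20.0, {"graphics": 3, "media": 1, "ai_engine": 0})
for block, watts in budget.items():
    print(f"{block}: {watts:.1f} W")
```

When the workload changes, calling the function again with new demand weights redistributes the same total budget, which is the behavior the graphics example above describes.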

Scheduling Enhancements

In order to realize all of these features of Meteor Lake, it will be necessary to strengthen the scheduling function. The workload scheduler has several enhancements. This applies to all work done with Intel Thread Director. You need to make sure that the right threads are being processed on the right cores for the best efficiency across the platform and good performance with good power efficiency.

I’ll explain how Intel Thread Director can help you extend your scheduling capabilities in the next article.

In summary, when it comes to Meteor Lake’s power saving features and power efficiency, the SoC tile is the key piece of hardware. It enables things that could not be achieved with previous CPUs, and it can be seen as the starting point for the further evolution of Intel CPUs.