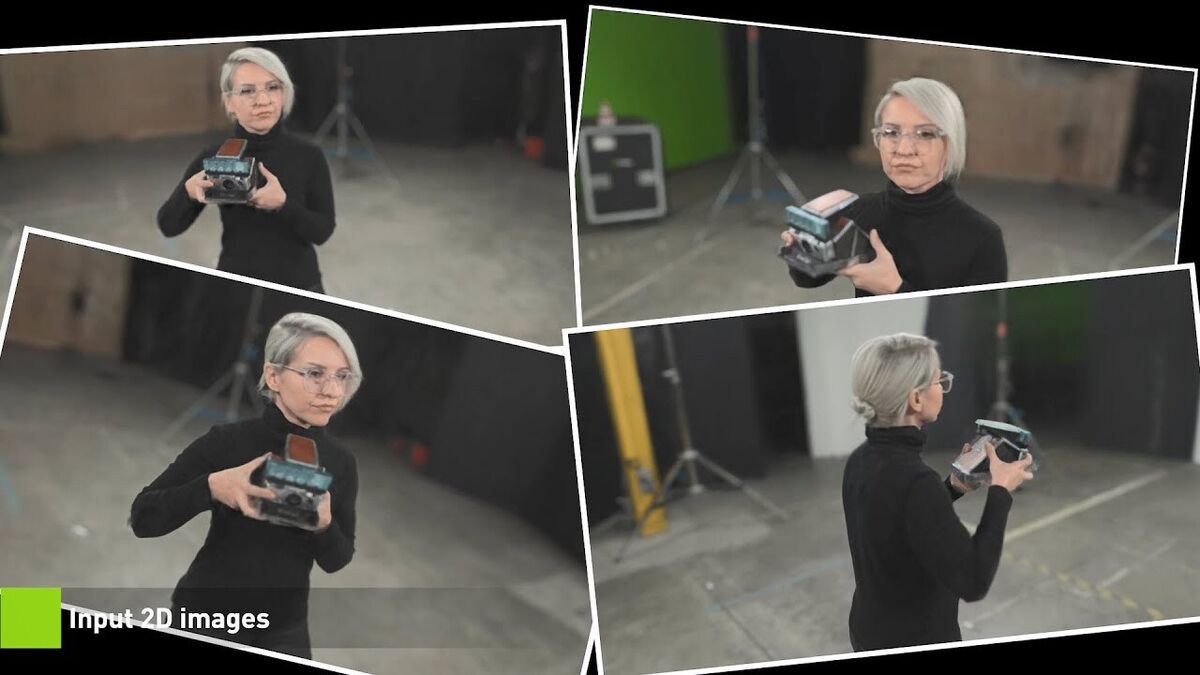

During an event at GTC 2022, NVIDIA Research unveiled an impressive technology called “Instant NeRF”, which can turn a set of 2D photos into a 3D scene in just a few seconds. To do this, the company's researchers rely on artificial intelligence, specifically a technique known as neural radiance fields (NeRFs).

NeRFs use a neural network to reconstruct a scene from a collection of input images, predicting the color of light radiating in any direction from any point in 3D space. Its creators claim it is the fastest NeRF technique to date, with speedups of more than 1,000x in some cases, so that a 1080p view can be rendered in milliseconds. Lead researcher Thomas Müller explains that this compounded speedup comes from three major improvements:

1. A task-specific GPU implementation of the rendering and training algorithm, which exploits the GPU's fine-grained control-flow capabilities to run much faster.
2. A fully fused implementation of small neural networks, which is faster than general-purpose matrix-multiplication routines.
3. A new input encoding called a multiresolution hash grid, which is task-independent and achieves a better speed/quality trade-off.
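To make the hash grid idea more concrete, here is a minimal Python sketch, assuming the formulation from the accompanying Instant NGP paper (Müller et al., 2022): each level has its own grid resolution, the integer corners of the voxel around a point are hashed into a small feature table with an XOR-of-primes hash, and the corner features are trilinearly interpolated and concatenated across levels. The names `spatial_hash`, `level_resolutions` and `HashGridEncoding`, the defaults, and the random, untrained tables are illustrative assumptions; the real implementation runs in CUDA, trains the tables by gradient descent, and indexes coarse levels directly instead of hashing them.

```python
import itertools
import math
import random

# Per-dimension primes used by the spatial hash in the Instant NGP paper.
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(corner, table_size):
    """XOR each integer coordinate times its prime, then wrap into the table."""
    h = 0
    for coord, prime in zip(corner, PRIMES):
        h ^= coord * prime
    return h % table_size


def level_resolutions(n_levels, n_min, n_max):
    """Grid resolution of each level, growing geometrically from n_min to n_max."""
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (n_levels - 1))
    return [math.floor(n_min * growth ** level) for level in range(n_levels)]


class HashGridEncoding:
    """Hypothetical, untrained multiresolution hash grid encoding for 3D points."""

    def __init__(self, n_levels=16, n_features=2, table_size=2**12,
                 n_min=16, n_max=512, seed=0):
        rng = random.Random(seed)
        self.table_size = table_size
        self.n_features = n_features
        self.resolutions = level_resolutions(n_levels, n_min, n_max)
        # One feature table per level; in a real model these values are trained.
        self.tables = [
            [[rng.uniform(-1e-4, 1e-4) for _ in range(n_features)]
             for _ in range(table_size)]
            for _ in range(n_levels)
        ]

    def encode(self, point):
        """Map a point in [0, 1]^3 to the concatenated per-level features."""
        features = []
        for res, table in zip(self.resolutions, self.tables):
            scaled = [p * res for p in point]
            base = [math.floor(s) for s in scaled]
            frac = [s - b for s, b in zip(scaled, base)]
            level_feat = [0.0] * self.n_features
            # Trilinearly interpolate the features stored at the 8 voxel corners.
            for offset in itertools.product((0, 1), repeat=3):
                corner = tuple(b + o for b, o in zip(base, offset))
                weight = 1.0
                for f, o in zip(frac, offset):
                    weight *= f if o else 1.0 - f
                entry = table[spatial_hash(corner, self.table_size)]
                for k in range(self.n_features):
                    level_feat[k] += weight * entry[k]
            features.extend(level_feat)
        return features


enc = HashGridEncoding()
print(len(enc.encode((0.25, 0.5, 0.75))))  # 16 levels x 2 features = 32
```

In a full model, the concatenated vector would be the input to the small neural network. Because each point touches only eight entries per level, lookups stay cheap, and hash collisions are left for training to resolve rather than handled explicitly.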

The “Instant NeRF” model was developed using the CUDA Toolkit and the Tiny CUDA Neural Networks library. If traditional 3D representations such as polygon meshes are akin to vector images, NeRFs are like bitmap images: they densely capture the way light radiates from an object or within a scene.
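Turning that dense description of light into a picture is done by volume rendering: the field is sampled at points along each camera ray and the samples are alpha-composited front to back. The sketch below shows the standard NeRF compositing rule in plain Python, with per-sample densities and colors passed in directly rather than predicted by a network. The function name `composite_ray` and the early-termination threshold `min_transmittance` are illustrative assumptions, not part of Instant NeRF's API.

```python
import math


def composite_ray(sigmas, colors, deltas, min_transmittance=1e-4):
    """Front-to-back alpha compositing of samples along one camera ray.

    sigmas: volume density at each sample
    colors: (r, g, b) emitted at each sample
    deltas: distance between each sample and the next
    Returns (pixel_rgb, accumulated_opacity).
    """
    transmittance = 1.0  # fraction of light not yet absorbed in front of the sample
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha
        # Early ray termination (illustrative threshold): later samples are hidden.
        if transmittance < min_transmittance:
            break
    return tuple(pixel), 1.0 - transmittance


# Usage: a ray through empty space stays black; a dense first sample hides the rest.
print(composite_ray([0.0, 0.0], [(1, 0, 0), (0, 1, 0)], [1.0, 1.0]))
print(composite_ray([50.0, 1.0], [(1, 0, 0), (0, 1, 0)], [1.0, 1.0]))
```

Stopping once almost no light gets through is a common way to avoid querying samples that cannot affect the pixel, which matters when the network is evaluated millions of times per frame.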

In that sense, the technology could be as important to 3D as digital cameras and JPEG compression were to 2D photography, increasing the speed, ease, and reach of 3D capture and sharing. Its potential applications are many and varied, from quickly scanning real people or environments to use them as the basis for digital designs, to helping autonomous robots better perceive the shape and size of real-world objects.
